Execute Sample Model

This section helps you to quickly execute the sample model (ResNet50) on the VEK280 board using the pre-built materials provided in the release.

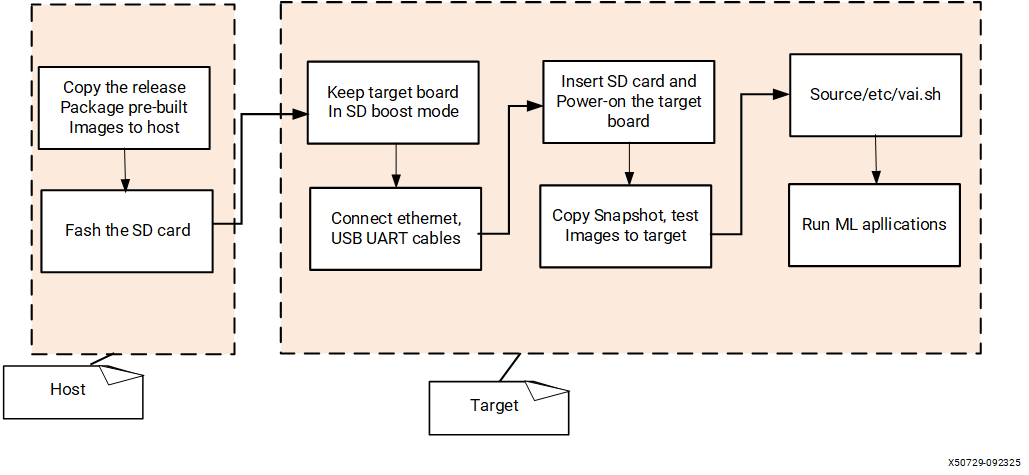

The following flow chart shows the steps needed to run the pre-built binaries:

The SD card image includes the following example applications. You can use any one of them to execute the model and generate inference results.

- VART Runner Application

- End-to-End (X+ML) Application

VART Runner Application

The Vitis AI software stack provides a reference application called VART Runner. It is developed using the VART ML APIs and is available in both Python and C++. You can use this application to execute the model or the snapshot and generate inference results on the VEK280 board.

Perform the following steps to run the VART Runner application on the VEK280 board:

1. Ensure that you have completed the SD card and target board setups. Refer to Installation for more information.

2. Insert the SD card into the VEK280 board and power the board on.

3. Log in with the username root and password root.

4. Download the following files on the Linux host machine:

ImageNet Dataset: Download the ImageNet dataset.

# Create a folder for the dataset

$ mkdir -p dataset/links

# Copy the download script from the Vitis AI source code that was downloaded in the "Download Source Code And Pre-Builts" section

$ cp <path_to_Vitis_AI>/Vitis-AI/examples/python_examples/batcher/scripts/download_ILSVRC12.py dataset/

$ cp <path_to_Vitis_AI>/Vitis-AI/examples/python_examples/batcher/links/pictures_urls.txt dataset/links/

$ cp <path_to_Vitis_AI>/Vitis-AI/examples/python_examples/batcher/links/ILSVRC2012_synset_words.txt dataset/links/

$ cd dataset

# Run the following command to download the ImageNet dataset and ground truth files, which are expected as input to the VART Runner application.

$ python3 download_ILSVRC12.py imagenet

# Copy the "imagenet" folder to the board.

$ scp -r imagenet/ root@<vek280_board_ip>:/root

5. Set up the Vitis AI tools environment on the board:

$ source /etc/vai.sh

6. Execute the VART Runner (Python or C++) application on the board.

6.1. VART Runner Python Application

The VART Runner Python application (called vart_ml_runner.py) uses the Python VART API to run any snapshot model with random input or simulation reference input. It also verifies that the inference operates correctly without timing out.

6.1.1. Usage.

$ vart_ml_runner.py [-h] [--snapshot SNAPSHOT] [--real_data] [--npu_only]

Mandatory arguments:

- --snapshot SNAPSHOT: Path to the snapshot directory.

Options:

- --n_stability_test N_STABILITY_TEST: Test stability through comparison of N additional iterations of the same run (default: 0).
- --npu_only: Skip ONNX subgraphs (default: False).
- --in_native: Enable native mode for inputs (default: False).
- --in_zero_copy: Enable zero copy mode for inputs (default: False).
- --out_native: Enable native mode for outputs (default: False).
- --out_zero_copy: Enable zero copy mode for outputs (default: False).
- --real_data: Re-use saved inputs and compare to expected output (default: False).
- --dump_outputs_path DUMP_OUTPUTS_PATH: Path where to dump outputs.

6.1.2. Run the application for the ResNet50 model (the prebuilt snapshot is available under /run/media/mmcblk0p1/):

$ vart_ml_runner.py --snapshot /run/media/mmcblk0p1/snapshot.$NPU_IP.resnet50.TF/

# The previous command runs the model with random input and verifies that the snapshot is executed on the target board.

The command results indicate whether the execution of the ResNet50 model was successful.

root@xilinx-vek280-xsct-20251:~# vart_ml_runner.py --snapshot /run/media/mmcblk0p1/snapshot.$NPU_IP.resnet50.TF/

XAIEFAL: INFO: Resource group Avail is created.

XAIEFAL: INFO: Resource group Static is created.

XAIEFAL: INFO: Resource group Generic is created.

[VART] Allocated config area in DDR: Addr = [ 0x880000000, 0x50000000000, 0x60000000000 ] Size = [ 0xe7b721, 0xa5ffa1, 0xe7b721]

[VART] Allocated tmp area in DDR: Addr = [ 0x880e7d000, 0x50000a61000, 0x60000e7d000 ] Size = [ 0x158c01, 0x127801, 0x127801]

[VART] Found snapshot for IP VE2802_NPU_IP_O00_A304_M3 matching running device VE2802_NPU_IP_O00_A304_M3

[VART] Parsing snapshot /run/media/mmcblk0p1/snapshot.VE2802_NPU_IP_O00_A304_M3.resnet50.TF//

[========================= 100% =========================]

Inference took 26 ms

Inference took 24 ms

Inference took 21 ms

Inference took 21 ms

Inference took 21 ms

Inference took 21 ms

Inference took 21 ms

Inference took 21 ms

Inference took 21 ms

Inference took 21 ms

OK: no error found

root@xilinx-vek280-xsct-20251:~#
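If you capture this console output to a file, a small host-side script can summarize the per-iteration latencies. The following is a minimal sketch, not part of the Vitis AI release: the helper names and the choice to treat the first two iterations as warm-up are assumptions made for illustration, and the sample text is copied from the output above.

```python
import re
from statistics import mean

# Sample lines copied from the vart_ml_runner.py console output.
LOG = """\
Inference took 26 ms
Inference took 24 ms
Inference took 21 ms
Inference took 21 ms
Inference took 21 ms
Inference took 21 ms
Inference took 21 ms
Inference took 21 ms
Inference took 21 ms
Inference took 21 ms
OK: no error found
"""


def parse_inference_times(log_text):
    """Return the per-iteration latencies (in ms) found in the log text."""
    return [int(ms) for ms in re.findall(r"Inference took (\d+) ms", log_text)]


def summarize(log_text, warmup=2):
    """Summarize latencies; `warmup` (an assumption) skips the first iterations."""
    times = parse_inference_times(log_text)
    if not times:
        raise ValueError("no 'Inference took N ms' lines found")
    steady = times[warmup:] or times
    return {
        "iterations": len(times),
        "mean_ms": mean(times),
        "steady_mean_ms": mean(steady),
        "passed": "OK: no error found" in log_text,
    }


summary = summarize(LOG)
print(summary)
```

Separating the first iterations from the rest makes it easier to compare steady-state latency between runs, because the first inferences in the sample output are slightly slower.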

6.2. VART Runner C++ Application

The VART Runner C++ application (called vart_ml_demo) is implemented based on the VART C++ APIs. It is a generic application that shows how to use the VART C++ APIs. It includes the pre-processing and post-processing of ResNet50 and top1/top5 computation. However, it might also work with other models with slight modifications.
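For readers unfamiliar with the top1/top5 metrics, the following minimal sketch shows the usual definition: a sample counts as a top-k hit when its ground-truth label appears among the k highest-ranked predictions. It is a generic illustration, not the vart_ml_demo implementation; the function name and the small sample data set are assumptions.

```python
def top_k_accuracy(ranked_predictions, gold_labels, k):
    """Fraction of samples whose gold label is among the first k ranked predictions."""
    if len(ranked_predictions) != len(gold_labels):
        raise ValueError("predictions and gold labels must have the same length")
    if not gold_labels:
        return 0.0
    hits = sum(
        1
        for ranked, gold in zip(ranked_predictions, gold_labels)
        if gold in ranked[:k]
    )
    return hits / len(gold_labels)


# Illustrative data: one list of class IDs per image, best prediction first.
predictions = [
    ["n03982430", "n03942813", "n04336792", "n03467068", "n02797295"],
    ["n01910747", "n07720875", "n02948072", "n02892767", "n09229709"],
    ["n03769881", "n04065272", "n04456115", "n04336792", "n03763968"],
]
gold = ["n03982430", "n07716906", "n04456115"]

top1 = top_k_accuracy(predictions, gold, k=1)
top5 = top_k_accuracy(predictions, gold, k=5)
print(f"top1={top1:.2%} top5={top5:.2%}")
```

In this sample, the first image is a top1 hit, the second is a miss, and the third is only a top5 hit because the correct label is ranked third.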

6.2.1. Usage.

$ vart_ml_demo --imgPath PATH --snapshot PATH --labels PATH [Options]

Mandatory arguments:

--snapshot PATH

Path to the snapshot directory.

Options:

--imgPath PATH

Either a directory or a list of images. If it is a directory, run on the nbImages first images. If it is a list of images, run on them (overrides nbImages). If you do not provide --imgPath, the system uses random input.

--labels PATH

Path to the file containing labels of results; defaults to labels.

--batchSize BATCHSIZE

The size of a batch of images to process; defaults to 1.

--channelOrder

Expected order of the channels, defaults to BGR.

--goldFile PATH

The path to the file containing the gold results. If you provide none, it does not perform a comparison.

--mean MEAN

Mean of a pixel (depends on the framework). The default value is 0.

--nbImages NBIMAGES

The number of images to process. The default value is 1.

--network NETWORK

Network to display.

--resizeType

Type of resize to apply to the input images; defaults to PanScan.

--std STD

The standard deviation (depends on the framework).

--nbThreads nb

Use nb threads.

--repeat nb

Run the input images nb times (the default value is 1) for profiling with large amounts of images.

--useExternalQuant

Force app-level quantization before feeding to VART ML and Forces.

--dataFormat

Force input and/or output data to be uploaded to/downloaded from VART ML in native format. Possible arguments are:

- 'native' (in and out)
- 'inNative' (input only)
- 'outNative' (output only)

--setNonCacheableInput

By default, skip the copy of input data. Doing so can improve performance assuming that input data is already located in a cacheable memory region. On the other hand, if you set this option and data is NOT stored in a cacheable memory region, it can result in performance degradation.

--setNonCacheableOutput

By default, skip the copy of output data. Doing so can improve performance assuming that output data is already located in a cacheable memory region. On the other hand, if you set this option and data is NOT stored in a cacheable memory region, it can result in performance degradation.

--fpgaArch

Specify the FPGA architecture for native format transformation; defaults to ‘aieml’.

--useCpuSubgraphs

Execute CPU nodes of the given model. Defaults to False.

--useSnapshotGold

Use the gold files from the snapshot instead of the images and gold file given; defaults to ‘False’.

--forceInOutDdr

Specify the DDR memories that are used to allocate input and output buffers by passing an ordered column-separated list of IDs.

--forceInDdr

Specify the DDR memories that are used to allocate input buffers by passing an ordered column-separated list of IDs.

--forceOutDdr

Specify the DDR memories that are used to allocate output buffers by passing an ordered column-separated list of IDs.
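The input selection rules described for --imgPath and --nbImages can be summarized in a short sketch. This is a hypothetical re-statement of the documented behavior, not the application's code: the function name, the lexicographic ordering of directory entries, and the extension filter are all assumptions.

```python
import os
import tempfile

# Assumption: which file extensions count as images.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".bmp")


def select_images(img_paths, nb_images=1):
    """Mimic the documented --imgPath rules.

    - no path given: return None, meaning "use random input"
    - a single directory: return its first nb_images images
    - a list of images: return all of them (nb_images is ignored)
    """
    if not img_paths:
        return None
    if len(img_paths) == 1 and os.path.isdir(img_paths[0]):
        directory = img_paths[0]
        names = sorted(
            name for name in os.listdir(directory)
            if name.lower().endswith(IMAGE_EXTENSIONS)
        )
        return [os.path.join(directory, name) for name in names[:nb_images]]
    return list(img_paths)


# Small demonstration on a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("b.JPEG", "a.JPEG", "notes.txt"):
        open(os.path.join(tmp, name), "w").close()
    picked = [os.path.basename(p) for p in select_images([tmp], nb_images=1)]

print(picked)
```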

6.2.2. Run the application for the ResNet50 model (The prebuilt snapshot is available under /run/media/mmcblk0p1/):

$ vart_ml_demo --batchSize 19 --goldFile imagenet/ILSVRC_2012_val_GroundTruth_10p.txt --imgPath imagenet/ILSVRC2012_img_val --nbImages 19 --labels /etc/vai/labels/labels --snapshot /run/media/mmcblk0p1/snapshot.$NPU_IP.resnet50.TF --useExternalQuant 64 --dataFormat native --channelOrder BGR

The vart_ml_demo command outputs the probability scores for the classification along with an accuracy summary:

root@xilinx-vek280-xsct-20251:~# vart_ml_demo --batchSize 19 --goldFile imagenet/ILSVRC_2012_val_GroundTruth_10p.txt --imgPath imagenet/ILSVRC2012_img_val --nbImages 19 --labels /etc/vai/labels/labels --snapshot /run/media/mmcblk0p1/snapshot.$NPU_IP.resnet50.TF --useExternalQuant 64 --dataFormat native --channelOrder BGR

XAIEFAL: INFO: Resource group Avail is created.

XAIEFAL: INFO: Resource group Static is created.

XAIEFAL: INFO: Resource group Generic is created.

[VART] Allocated config area in DDR: Addr = [ 0x880000000, 0x50000000000, 0x60000000000 ] Size = [ 0xe7b721, 0xa5ffa1, 0xe7b721]

[VART] Allocated tmp area in DDR: Addr = [ 0x880e7d000, 0x50000a61000, 0x60000e7d000 ] Size = [ 0x158c01, 0x127801, 0x127801]

[VART] Found snapshot for IP VE2802_NPU_IP_O00_A304_M3 matching running device VE2802_NPU_IP_O00_A304_M3

[VART] Parsing snapshot /run/media/mmcblk0p1/snapshot.VE2802_NPU_IP_O00_A304_M3.resnet50.TF/

[========================= 100% =========================]

NPU only mode set. Skipping node resnet50_CPU.

resnet50 Image 0 (0:0) ILSVRC2012_val_00000001.JPEG

resnet50 GOLD - n03982430 pool table, billiard table, snooker table - 1.000000

resnet50 PRED - n03982430 pool table, billiard table, snooker table - 0.23

resnet50 PRED - n03942813 ping-pong ball - 0.00

resnet50 PRED - n04336792 stretcher - 0.00

resnet50 PRED - n03467068 guillotine - 0.00

resnet50 PRED - n02797295 barrow, garden cart, lawn cart, wheelbarrow - 0.00

resnet50

resnet50 Image 1 (1:0) ILSVRC2012_val_00000002.JPEG

resnet50 GOLD - n07716906 spaghetti squash - 1.000000

resnet50 PRED - n01910747 jellyfish - 0.00

resnet50 PRED - n07720875 bell pepper - 0.00

resnet50 PRED - n02948072 candle, taper, wax light - 0.00

resnet50 PRED - n02892767 brassiere, bra, bandeau - 0.00

resnet50 PRED - n09229709 bubble - 0.00

..............................

resnet50 Image 17 (17:0) ILSVRC2012_val_00000018.JPEG

resnet50 GOLD - n02168699 long-horned beetle, longicorn, longicorn beetle - 1.000000

resnet50 PRED - n02168699 long-horned beetle, longicorn, longicorn beetle - 0.00

resnet50 PRED - n02167151 ground beetle, carabid beetle - 0.00

resnet50 PRED - n02177972 weevil - 0.00

resnet50 PRED - n02165105 tiger beetle - 0.00

resnet50 PRED - n02169497 leaf beetle, chrysomelid - 0.00

resnet50

resnet50 Image 18 (18:0) ILSVRC2012_val_00000019.JPEG

resnet50 GOLD - n04456115 torch - 1.000000

resnet50 PRED - n03769881 minibus - 0.00

resnet50 PRED - n04065272 recreational vehicle, RV, R.V. - 0.00

resnet50 PRED - n04456115 torch - 0.00

resnet50 PRED - n04336792 stretcher - 0.00

resnet50 PRED - n03763968 military uniform - 0.00

resnet50

============================================================

Accuracy Summary:

[AMD] [resnet50 TEST top1] 57.89% passed.

[AMD] [resnet50 TEST top5] 78.95% passed.

[AMD] [resnet50 ALL TESTS] 57.89% passed.

[AMD] VART ML runner data format was set to NATIVE.

[AMD] 7107.15 imgs/s (19 images)

root@xilinx-vek280-xsct-20251:~#
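To track results across runs, the accuracy summary can be extracted from a saved copy of this console output. The sketch below is one possible approach and is not shipped with the release; the regular expressions are written against the summary lines shown above, so treat them as assumptions if the log format differs in your version.

```python
import re

# Summary lines copied from the vart_ml_demo console output.
SUMMARY = """\
[AMD] [resnet50 TEST top1] 57.89% passed.
[AMD] [resnet50 TEST top5] 78.95% passed.
[AMD] [resnet50 ALL TESTS] 57.89% passed.
[AMD] VART ML runner data format was set to NATIVE.
[AMD] 7107.15 imgs/s (19 images)
"""


def parse_summary(text):
    """Extract top-k percentages, throughput, and image count from the summary."""
    result = {}
    for name, pct in re.findall(r"\[\w+ TEST (top\d+)\] ([\d.]+)% passed", text):
        result[name] = float(pct)
    match = re.search(r"([\d.]+) imgs/s \((\d+) images\)", text)
    if match:
        result["imgs_per_s"] = float(match.group(1))
        result["images"] = int(match.group(2))
    return result


summary = parse_summary(SUMMARY)

# Convert the percentages back to a number of correctly classified images.
top1_hits = round(summary["top1"] / 100 * summary["images"])
top5_hits = round(summary["top5"] / 100 * summary["images"])
print(summary, top1_hits, top5_hits)
```

For the 19 images in this run, the reported percentages correspond to 11 top1 hits and 15 top5 hits.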

Note

- The vart_ml_demo application includes software-based pre- and post-processing for the ResNet50 model. For custom models, you need to tailor this application with your own pre- and post-processing implementations.

- channelOrder is BGR by default because the example model (ResNet50) is trained with the BGR format. Change it to match your model's training format.

- There is a known issue with the vart_ml_demo application in printing the prediction scores.

The VART Runner application employs OpenCV libraries (executed on the APU) for image decoding and pre-processing, which encompasses operations such as resizing, color-space conversion, and normalization. The following section discusses the End-to-End (X+ML) application that leverages X components for accelerated pre- and post-processing, in conjunction with the ML component for inference.
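To make the color-space conversion and normalization steps concrete, here is a minimal per-pixel sketch in plain Python. It is not the OpenCV code used by the application. The formula (value - mean) / std is the conventional normalization and is assumed here, as are the function name and the std default of 1.0 (the documentation only states a default for the mean).

```python
def preprocess_pixel(rgb_pixel, channel_order="BGR", mean=0.0, std=1.0):
    """Reorder the channels of one RGB pixel and normalize each value.

    Assumption: normalization is (value - mean) / std, applied per channel.
    """
    if std == 0:
        raise ValueError("std must be non-zero")
    if channel_order == "BGR":
        ordered = tuple(reversed(rgb_pixel))
    elif channel_order == "RGB":
        ordered = tuple(rgb_pixel)
    else:
        raise ValueError(f"unsupported channel order: {channel_order}")
    return tuple((value - mean) / std for value in ordered)


# A pure red RGB pixel, reordered to BGR and scaled to the range [-1, 1].
red_bgr = preprocess_pixel((255, 0, 0), channel_order="BGR", mean=127.5, std=127.5)
print(red_bgr)
```

Feeding a model trained on BGR data with RGB-ordered pixels (or the reverse) typically degrades accuracy, which is why the channel order must match the training format.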

End-to-End (X+ML) Application

The End-to-End application, also known as the X+ML application (x_plus_ml_app), is developed using VART ML and X APIs. It facilitates the complete video analytics pipeline, which includes the following:

- File Input

- Pre-processing

- Inference

- Post-processing

- Overlay

- File Output

This application employs OpenCV libraries for the File I/O, JPEG input decoding, and overlay functions. It leverages X components for accelerated pre- and post-processing and the ML component for inference.

You can use the X+ML application to run the ResNet50 model. It produces inference results and saves them to a file. You can then transfer this file to the host machine, where you can view the inference results with software tools like GStreamer or FFmpeg.

Before attempting to run the X+ML application on the VEK280 board, ensure that you have performed the steps described in the VART Runner Application section and that the board is operational.

Run X+ML Application on VEK280 Board

Usage

$ x_plus_ml_app -i <path_to_input_file> -s <path_to_snapshot> -c <path_to_config_file> [Options]

Mandatory arguments:

- -i: Input file path (mandatory).
- -s: Snapshot path (mandatory).
- -c: Config file path (mandatory). It is a JSON configuration file that contains information about parameters for pre-processing, post-processing, and metaconvert. Refer to the x_plus_ml_app Configuration File.

Options:

- -o: Output file path (optional). If provided, the program dumps inference results overlaid on the frame into this file.
- -n: Number of frames to process (optional, default is to process all frames).
- -l: Application log level to print logs (optional, default is ERROR and WARNING). Accepted log levels: 1 for ERROR, 2 for WARNING, 3 for INFERENCE RESULT, 4 for INFO, 5 for DEBUG. Prints the logs at the provided level and the levels below.
- -d: WidthxHeight of the input (required only in the case of NV12 input; example: 224x224).
- -r: Dump PL output; default is false.
- -h: Print this help and exit.

Sample CLIs:

Single snapshot execution:

$ x_plus_ml_app -i dog.jpg -c /etc/vai/json-config/yolox_pl.json -s snapshot.yolox.0408 -l 3

Multi-snapshot execution:

$ x_plus_ml_app -i dog.jpg+dog.jpg -c /etc/vai/json-config/yolox_pl.json+/etc/vai/json-config/yolox_pl.json -s snapshot.yolox.0408+snapshot.yolox.0408 -l 3+3
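In the multi-snapshot sample CLI, each option carries one value per snapshot, joined with a plus sign. If you generate such commands from a script, a helper along the following lines may be convenient. This is a sketch only: the function name and the job dictionary layout are hypothetical, and it covers just the -i, -c, -s, and -l options used in the samples.

```python
import shlex


def build_x_plus_ml_command(jobs, log_level=None):
    """Build an x_plus_ml_app argument list with '+'-joined per-snapshot values.

    jobs: list of dicts with the keys 'input', 'config', and 'snapshot'.
    """
    if not jobs:
        raise ValueError("at least one job is required")
    argv = [
        "x_plus_ml_app",
        "-i", "+".join(job["input"] for job in jobs),
        "-c", "+".join(job["config"] for job in jobs),
        "-s", "+".join(job["snapshot"] for job in jobs),
    ]
    if log_level is not None:
        # One log level per snapshot, as in the "-l 3+3" sample.
        argv += ["-l", "+".join(str(log_level) for _ in jobs)]
    return argv


job = {
    "input": "dog.jpg",
    "config": "/etc/vai/json-config/yolox_pl.json",
    "snapshot": "snapshot.yolox.0408",
}
argv = build_x_plus_ml_command([job, job], log_level=3)
print(shlex.join(argv))
```

Returning a list instead of a single string lets you pass the result directly to subprocess.run without additional shell quoting.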

Run the X+ML application:

$ cd /root

$ source /etc/vai.sh

$ x_plus_ml_app -i /root/imagenet/ILSVRC2012_img_val/ILSVRC2012_val_00000001.JPEG -s /run/media/mmcblk0p1/snapshot.$NPU_IP.resnet50.TF -c /etc/vai/json-config/resnet50.json -o output.bgr -l 3

The previous command generates the output file at the path specified by the -o option. If the input file is in JPEG format, the output is in BGR24 format. If the input file is in NV12 format, the output remains in NV12 format. Additionally, the application provides an option to enable inference output by specifying the -l 3 option. The command also displays log messages that include the resolution and format of the output.
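As a quick sanity check on the generated file, the size of one raw frame follows from the pixel format: BGR24 stores 3 bytes per pixel, and NV12 stores a full-resolution luma plane plus a half-size interleaved chroma plane (1.5 bytes per pixel on average). The sketch below computes these sizes. The function name is hypothetical, and it assumes tightly packed rows without padding.

```python
def raw_frame_size(width, height, pixel_format):
    """Return the size in bytes of one tightly packed raw frame."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if pixel_format == "bgr24":
        return width * height * 3
    if pixel_format == "nv12":
        if width % 2 or height % 2:
            raise ValueError("NV12 requires even width and height")
        # Y plane (width * height) + interleaved UV plane (width * height / 2).
        return width * height * 3 // 2
    raise ValueError(f"unsupported pixel format: {pixel_format}")


print(raw_frame_size(160, 160, "bgr24"))
print(raw_frame_size(224, 224, "nv12"))
```

Comparing this value (multiplied by the number of frames written) with the actual file size is a simple way to confirm the resolution before viewing the file.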

The following block shows the results of the x_plus_ml_app command:

root@xilinx-vek280-xsct-20251:~# x_plus_ml_app -i /root/imagenet/ILSVRC2012_img_val/ILSVRC2012_val_00000001.JPEG -s /run/media/mmcblk0p1/snapshot.$NPU_IP.resnet50.TF -c /etc/vai/json-config/resnet50.json -o output.bgr -l 3

XAIEFAL: INFO: Resource group Avail is created.

XAIEFAL: INFO: Resource group Static is created.

XAIEFAL: INFO: Resource group Generic is created.

XAIEFAL: INFO: Resource group Avail is created.

XAIEFAL: INFO: Resource group Static is created.

XAIEFAL: INFO: Resource group Generic is created.

[VART] Allocated config area in DDR: Addr = [ 0x880000000, 0x50000000000, 0x60000000000 ] Size = [ 0xe7b721, 0xa5ffa1, 0xe7b721]

[VART] Allocated tmp area in DDR: Addr = [ 0x880e7d000, 0x50000a61000, 0x60000e7d000 ] Size = [ 0x158c01, 0x127801, 0x127801]

[VART] Found snapshot for IP VE2802_NPU_IP_O00_A304_M3 matching running device VE2802_NPU_IP_O00_A304_M3

[VART] Parsing snapshot /run/media/mmcblk0p1/snapshot.VE2802_NPU_IP_O00_A304_M3.resnet50.TF/

[========================= 100% =========================]

NPU only mode set. Skipping node resnet50_CPU.

[RESULT] post_process.cpp:164 Results for frame number 1

[RESULT] post_process.cpp:174 Classification Label : pool table, billiard table, snooker table (confidence 0.999967)

Inference time for frame 1 : 3.879 ms

Number of frames processed: 1

Average Inference Time for 1 Frames: 3.879 ms

Output dumped at output.bgr with 160x160 resolution and BGR format

root@xilinx-vek280-xsct-20251:~#
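When the application runs with -l 3, the classification results appear as [RESULT] lines in the console. If you save this output, you can extract the labels and confidences with a short script. The sketch below is an illustration written against the log lines shown above; the pattern and function name are assumptions and might need adjusting for other models or releases.

```python
import re

# Lines copied from the x_plus_ml_app console output.
LOG = """\
[RESULT] post_process.cpp:164 Results for frame number 1
[RESULT] post_process.cpp:174 Classification Label : pool table, billiard table, snooker table (confidence 0.999967)
Inference time for frame 1 : 3.879 ms
"""

RESULT_PATTERN = re.compile(
    r"Classification Label : (?P<label>.+) \(confidence (?P<confidence>[\d.]+)\)"
)


def parse_classifications(log_text):
    """Return a list of (label, confidence) tuples found in the log text."""
    return [
        (match["label"], float(match["confidence"]))
        for match in RESULT_PATTERN.finditer(log_text)
    ]


results = parse_classifications(LOG)
print(results)
```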

The following additional reference command expects NV12 input and generates NV12 output.

Download the test_samples-vai-5.1.zip file and copy it to the board before running the following commands.

# Run the following command on the host machine

$ scp <path_to_test_samples-vai-5.1.zip> root@<vek280_board_ip>:/root

# Run the following commands on the board

$ cd /root

$ unzip test_samples-vai-5.1.zip

$ x_plus_ml_app -i /root/test_samples/CLASSIFICATION_224x224.nv12 -s /run/media/mmcblk0p1/snapshot.$NPU_IP.resnet50.TF -c /etc/vai/json-config/resnet50.json -d 224x224 -l 3 -o output.nv12

Note

The x_plus_ml_app application implements software-based post-processing for the ResNet50 model. You must customize this application with post-processing implementations for custom models.

Verify the Output of the X+ML Application on the Host Machine

You can view the output results (for example, output.bgr) using FFmpeg or GStreamer commands as follows:

Copy the output of the X+ML application (output.bgr) from the board to the host machine by running the following command on the host machine:

$ scp root@<vek280_board_ip>:/root/output.bgr .

Verify the output with FFmpeg:

# Usage:

# ffplay -f rawvideo -pixel_format PIXEL_FORMAT -video_size video_widthxvideo_height -i path_to_output_file

# PIXEL_FORMAT: bgr24 or nv12, depending on the output generated by the X+ML application.

# Command to run:

$ ffplay -f rawvideo -pixel_format bgr24 -video_size 160x160 -i output.bgr

# The image resolution is 160x160, as indicated by the output of x_plus_ml_app shown in the console.

# [Optional] You can also convert the BGR format to JPEG by using the ffmpeg command and view the JPEG results with any media player tool:

$ ffmpeg -f rawvideo -pixel_format bgr24 -video_size 160x160 -i output.bgr output.jpeg

# Another example command:

$ ffplay -f rawvideo -pixel_format nv12 -video_size 160x160 -i output.nv12

The FFmpeg command displays the output file output.bgr as shown in the following image.

Note

The output results might differ based on the input image.

Verify the output with GStreamer:

# Usage:

# gst-launch-1.0 multifilesrc location=path_to_output_file loop=true ! video/x-raw,format=BGR,height=video_height,width=video_width,framerate=30/1 ! videoconvert ! autovideosink

# Command to run:

$ gst-launch-1.0 multifilesrc location=output.bgr loop=true ! video/x-raw,format=BGR,height=160,width=160,framerate=30/1 ! videoconvert ! autovideosink

# The image resolution is 160x160, as indicated by the output of x_plus_ml_app shown in the console.

# Another example command:

$ gst-launch-1.0 multifilesrc location=output.nv12 loop=true ! video/x-raw,format=NV12,height=160,width=160,framerate=30/1 ! videoconvert ! autovideosink

The GStreamer command displays the output file output.bgr as shown in the following image.

Note

The output results might differ based on the input image.
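If neither FFmpeg nor GStreamer is available on the host, a single raw BGR24 frame can also be converted to a binary PPM image, which many image viewers open directly. The following sketch uses only the Python standard library. It is an alternative suggested here rather than part of the documented flow, the function name is hypothetical, and it assumes one tightly packed frame with known dimensions.

```python
def bgr24_to_ppm(raw, width, height):
    """Convert one tightly packed BGR24 frame to binary PPM (P6) bytes."""
    expected = width * height * 3
    if len(raw) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(raw)}")
    rgb = bytearray(expected)
    # Swap the first and third channel of every pixel: BGR -> RGB.
    rgb[0::3] = raw[2::3]
    rgb[1::3] = raw[1::3]
    rgb[2::3] = raw[0::3]
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(rgb)


# Tiny self-check with a 2x1 frame: pixel values (B, G, R) = (1, 2, 3) and (4, 5, 6).
sample = bgr24_to_ppm(bytes([1, 2, 3, 4, 5, 6]), width=2, height=1)
print(sample)

# Example usage with the file produced by x_plus_ml_app (160x160 in this run):
#   with open("output.bgr", "rb") as src:
#       ppm = bgr24_to_ppm(src.read(), 160, 160)
#   with open("output.ppm", "wb") as dst:
#       dst.write(ppm)
```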

You have now successfully run the sample (ResNet50) model on the VEK280 target. You can refer to the following areas of interest:

- Customization Opportunities

- Performance Analyzer